Continuing with part 2 of this series, I’m going to discuss the zoning requirements for SANcopy on the XtremIO. To recap before we begin, I have a VMware environment that I am migrating from VNX to XtremIO. Most of this environment can be migrated to the XtremIO via Storage vMotion. However, quite a few VMs have physical-mode RDMs that need to be migrated via SANcopy. We chose SANcopy over Open Migrator for the following reasons:

- SANcopy enabler is installed on the source VNX

- SANcopy requires only one outage, to shut down the server at the time of cutover

- SANcopy is array-based and would not impact the host CPU

- Open Migrator is only supported for Microsoft Windows Server

- Open Migrator requires three reboots to migrate (one to attach the filter driver to the source and target drives, a second to actually cut over once the drives are in sync, and a third to uninstall the software)

First things first: we need to zone our target XtremIO to the source VNX. Following EMC best practices, we will create 1-to-1 zones on each fabric, pairing each SP A and SP B port with a port on each of two XtremIO storage controllers.

Fabric A

| Zones | Source VNX | Target XtremIO |
| --- | --- | --- |
| Zone 1 | SP A-port 5 | X1-SC1-FC1 |
| Zone 2 | SP A-port 5 | X1-SC2-FC1 |
| Zone 3 | SP B-port 5 | X1-SC1-FC1 |
| Zone 4 | SP B-port 5 | X1-SC2-FC1 |

*SP A-port 5 and SP B-port 5 are connected to Fabric A in my environment.*

Fabric B

| Zones | Source VNX | Target XtremIO |
| --- | --- | --- |
| Zone 1 | SP A-port 4 | X1-SC1-FC2 |
| Zone 2 | SP A-port 4 | X1-SC2-FC2 |
| Zone 3 | SP B-port 4 | X1-SC1-FC2 |
| Zone 4 | SP B-port 4 | X1-SC2-FC2 |

*SP A-port 4 and SP B-port 4 are connected to Fabric B in my environment.*

You should end up with zones that look something like this:

zone name XIO3136_X1_SC1_FC2_VNX5500_SPA_P4 vsan 200

member fcalias XIO3136_X1_SC1_FC2

member fcalias VNX_SPA_P4

exit

zone name XIO3136_X1_SC2_FC2_VNX5500_SPA_P4 vsan 200

member fcalias XIO3136_X1_SC2_FC2

member fcalias VNX_SPA_P4

exit

zone name XIO3136_X1_SC1_FC2_VNX5500_SPB_P4 vsan 200

member fcalias XIO3136_X1_SC1_FC2

member fcalias VNX_SPB_P4

exit

zone name XIO3136_X1_SC2_FC2_VNX5500_SPB_P4 vsan 200

member fcalias XIO3136_X1_SC2_FC2

member fcalias VNX_SPB_P4

exit

Yes… yes… I know I used the acronym XIO (XIO is not XtremIO) for my fcalias and zone names. Sorry! 🙂
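Since the zone definitions above follow a regular pattern (one zone per VNX SP port and XtremIO port pair), they can be generated rather than typed by hand. The following is only a rough sketch in Python: the `build_zones` helper and its parameters are hypothetical names of my own, it assumes the fcalias entries already exist on the switch, and it simply reproduces the naming convention shown above for Fabric B (VSAN 200).

```python
from itertools import product


def build_zones(xio_ports, vnx_ports, vsan,
                array_prefix="XIO3136", vnx_model="VNX5500"):
    """Return zone config text with one zone per (VNX port, XtremIO port) pair.

    Hypothetical helper: the alias and zone names only mirror the naming
    convention used in this post, and the fcaliases are assumed to exist.
    """
    lines = []
    # Iterate VNX ports first so the output order matches the tables above.
    for vnx, xio in product(vnx_ports, xio_ports):
        lines.append(f"zone name {array_prefix}_{xio}_{vnx_model}_{vnx} vsan {vsan}")
        lines.append(f"member fcalias {array_prefix}_{xio}")
        lines.append(f"member fcalias VNX_{vnx}")
        lines.append("exit")
    return "\n".join(lines)


# Fabric B: SP A-port 4 and SP B-port 4 zoned to FC2 on both storage controllers.
fabric_b = build_zones(
    xio_ports=["X1_SC1_FC2", "X1_SC2_FC2"],
    vnx_ports=["SPA_P4", "SPB_P4"],
    vsan=200,
)
print(fabric_b)
```

Review the generated text before pasting it into a switch session. The same call with the FC1 ports, the port 5 aliases, and your own Fabric A VSAN number would give the Fabric A zones.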

If you have more than one brick in your XtremIO cluster, you can choose to split this across multiple bricks. Although you only need to zone one storage controller at a minimum, we are zoning two controllers and will split the SANcopy sessions across them to balance the load.

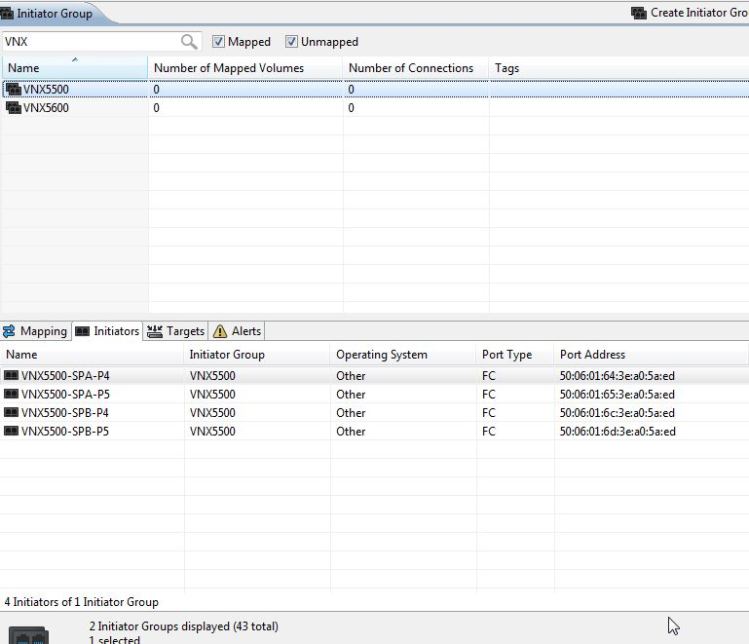

Once the zoning is in place, the VNX should be visible from the XtremIO. You can check this in the CLI by issuing the show-discovered-initiators-connectivity command, or in the GUI by creating a new initiator group for the VNX and opening the drop-down list to show the SP A and SP B WWPNs. Create a new initiator group on the XtremIO for the VNX and map the target volumes for the SANcopy session to this initiator group. Take note of the HLU you assigned to each volume mapping, as well as the target FC ports on the XtremIO that you zoned to the VNX.

xmcli (admin)> show-discovered-initiators-connectivity

Discovered Initiator List:

Cluster-Name Index Port-Type Port-Address Num-Of-Conn-Targets

ATLNNASPXTREMIO01 1 fc 50:06:01:61:08:60:10:60 2

ATLNNASPXTREMIO01 1 fc 50:06:01:62:08:60:10:60 2

ATLNNASPXTREMIO01 1 fc 50:06:01:64:3e:a0:5a:ed 2

ATLNNASPXTREMIO01 1 fc 50:06:01:65:3e:a0:5a:ed 2

ATLNNASPXTREMIO01 1 fc 50:06:01:69:08:60:10:60 2

ATLNNASPXTREMIO01 1 fc 50:06:01:6a:08:60:10:60 2

ATLNNASPXTREMIO01 1 fc 50:06:01:6c:3e:a0:5a:ed 2

ATLNNASPXTREMIO01 1 fc 50:06:01:6d:3e:a0:5a:ed 2
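If you save that output to a file, a quick sanity check is to confirm that every discovered initiator reports the number of connected targets you expect (two here, since each SP port is zoned to two storage controllers). Below is a minimal Python sketch of that check. It assumes the whitespace-separated column layout shown above, and the function names `parse_discovered_initiators` and `under_connected` are my own, not part of any EMC tool.

```python
import re

# Sample text copied from the xmcli output above.
SAMPLE_OUTPUT = """\
Cluster-Name Index Port-Type Port-Address Num-Of-Conn-Targets
ATLNNASPXTREMIO01 1 fc 50:06:01:61:08:60:10:60 2
ATLNNASPXTREMIO01 1 fc 50:06:01:62:08:60:10:60 2
ATLNNASPXTREMIO01 1 fc 50:06:01:64:3e:a0:5a:ed 2
ATLNNASPXTREMIO01 1 fc 50:06:01:65:3e:a0:5a:ed 2
ATLNNASPXTREMIO01 1 fc 50:06:01:69:08:60:10:60 2
ATLNNASPXTREMIO01 1 fc 50:06:01:6a:08:60:10:60 2
ATLNNASPXTREMIO01 1 fc 50:06:01:6c:3e:a0:5a:ed 2
ATLNNASPXTREMIO01 1 fc 50:06:01:6d:3e:a0:5a:ed 2
"""

# Eight colon-separated hex bytes, e.g. 50:06:01:61:08:60:10:60
WWPN_RE = re.compile(r"^(?:[0-9a-f]{2}:){7}[0-9a-f]{2}$", re.IGNORECASE)


def parse_discovered_initiators(text):
    """Parse data rows, skipping headers and any line without a WWPN in column 4."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 5 or not WWPN_RE.match(fields[3]):
            continue
        rows.append({
            "cluster": fields[0],
            "port_type": fields[2],
            "wwpn": fields[3].lower(),
            "targets": int(fields[4]),
        })
    return rows


def under_connected(rows, expected_targets=2):
    """Return WWPNs that see fewer XtremIO targets than expected."""
    return [r["wwpn"] for r in rows if r["targets"] < expected_targets]


rows = parse_discovered_initiators(SAMPLE_OUTPUT)
missing = under_connected(rows)
print(f"{len(rows)} initiators discovered, {len(missing)} under-connected")
```

Any WWPN returned by `under_connected` points to a zone that is missing or not yet activated for that SP port.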

The next part of this guide will discuss what is needed on the source VNX before SANcopy sessions can be created. We will cover the reserved LUN pool, the requirements around it, and creating the SANcopy session itself. Stay tuned!